You have worked with the team or client to identify survey goals, written clear and concise questions, confirmed that the question order will not bias results, and located potential respondents to interview. You are almost ready to start collecting valuable data. But wait, don’t forget to thoroughly test your survey before you hit that start button!

Testing an electronic survey involves more than proofreading for grammar and content; it is a detailed process that can dramatically impact project results. Check out these survey testing tips so you can head off potential problems.

Read your survey out loud and simulate taking the survey before programming

I read this tip in an article years ago and continue to use it for all my surveys, whether or not they are electronic. Most online survey programming tools provide an estimate of how long it will take to complete the survey, but keep in mind this is just an estimate. If you are going to mention survey length, you should be confident in the time promised. Reading the survey out loud before it is programmed may sound like a strange step, but reading text aloud takes longer than reading it silently, so the time you measure is a conservative upper bound. You should also simulate survey actions (e.g., ranking attributes with an onscreen slider or typing a response to an open-ended question). If you can read the entire survey out loud and act out the necessary actions in 10 minutes, then you can be confident that respondents will complete it in less than 10 minutes.

Test your survey with and without survey logic

Including survey logic (also known as questionnaire branching) is a great way to simplify the survey-taking process by showing respondents only questions that are relevant to them. You can skip questions, entire survey sections, or disqualify respondents based on their responses. Tailoring questions to the respondent can lead to better engagement because the respondent feels more involved in the process.

While goals like shortening the questionnaire and decreasing respondent abandonment are good, survey logic adds complexity and a greater chance of programming error. That means you will likely want to test the survey both with and without logic in place. Ideally, you will do your initial testing before adding any logic (particularly for highly complex questionnaires). It may sound like an extraneous step, but errors found after logic is included can have a cascading effect: at that point, even small changes can alter how the survey branches in unexpected ways. It’s usually easier to reorder questions and make other changes that affect branching before adding survey logic. Once logic is included, you should continue to test question order and wording, especially where logic determines which questions a respondent receives.
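One way to check branching is to walk every answer combination through the logic and list the question paths that result. The sketch below is only an illustration: the three questions, their answer options, and the skip rule in `next_question` are hypothetical and not taken from any particular survey tool.

```python
from itertools import product

# Hypothetical three-question survey: each question maps to its answer options.
QUESTIONS = {
    "q1_owns_printer": ["yes", "no"],
    "q2_printer_brand": ["brand_a", "brand_b"],
    "q3_age_group": ["18-34", "35+"],
}

def next_question(current, answer):
    """Hypothetical skip logic: non-owners skip the brand question."""
    if current == "q1_owns_printer":
        return "q2_printer_brand" if answer == "yes" else "q3_age_group"
    if current == "q2_printer_brand":
        return "q3_age_group"
    return None  # q3 is the last question

def walk(answers):
    """Return the ordered list of questions shown for one set of answers."""
    path, current = [], "q1_owns_printer"
    while current is not None:
        path.append(current)
        current = next_question(current, answers[current])
    return path

def all_paths():
    """Collect the distinct question paths across every answer combination."""
    names = list(QUESTIONS)
    paths = set()
    for combo in product(*(QUESTIONS[n] for n in names)):
        paths.add(tuple(walk(dict(zip(names, combo)))))
    return paths

paths = all_paths()
reachable = {q for p in paths for q in p}
unreachable = set(QUESTIONS) - reachable  # questions no respondent can ever see

for p in sorted(paths):
    print(" -> ".join(p))
print("Unreachable questions:", sorted(unreachable))
```

A question that lands in `unreachable`, or a path that skips a question you expected everyone to see, points to a branching error worth fixing before fielding.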

Test your survey in multiple browsers and devices

You cannot control which web browser a respondent will use for the survey. We consider it a best practice to test the survey across multiple browsers so that respondents get the best experience and can navigate properly. According to Statcounter GlobalStats, the most popular U.S. browsers across all devices (desktop and mobile) are, in order, Chrome, Safari, Firefox, Edge, and Internet Explorer. You should test the survey in each browser to confirm question layout and text legibility.

While desktop and laptop browsers are still dominant, mobile devices (smartphones and tablets) are becoming more popular for taking electronic surveys. A recent research study presented in Quirk’s Magazine shows that 39% of a nationally representative U.S. sample elected to complete their survey using a mobile device. That percentage is even higher among Hispanics (49%) and African Americans (48%). The study also shows that smartphone completion skews toward younger and more affluent consumers (groups that are often of interest to marketers). It is essential that survey testing include desktop, tablet, and smartphone devices.

Have someone outside the project complete the survey

Up to this point, you and the stakeholders may be the only people with exposure to the survey. Chances are you are so close to the project you can probably recite the questions and options in your sleep. This makes it the perfect time for someone else to examine the survey. There are usually limitations to the people with whom you can share surveys due to security and privacy concerns. If possible, find someone from your team who is not familiar with the survey and have them take it as though they were a real respondent.

Have stakeholders take the survey

No doubt stakeholders have been involved with your survey before its completion. Now that the electronic survey is designed and built, it’s time to further engage stakeholders to make sure the survey is in line with their expectations. Stakeholders may have forgotten early planning meetings, so make sure to remind them of survey goals.

Caution: This can be a time when stakeholders request that more questions be added to the survey. Adding questions means repeating the five testing steps above, so only add questions that are in line with survey goals and can lead to actionable results. If a lot of additions are requested, it may be a sign that a follow-up project is appropriate.

Test data reports

Now that the survey is ready there is another item to test: data reporting.

You have planned the questions you want to ask, but how will the data look (e.g. data exports, summary reports, crosstabs, etc.)? It is important that the data be in a convenient format and easy to analyze. A good plan is to run dummy data with hundreds or even thousands of faux completes. In fact, most programming tools have this option. By using this test data, you can tweak questions, add validation, and change data formats as needed to help with analysis.
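If your survey tool does not generate test data, a short script can stand in for it. The sketch below creates random faux completes and counts them in a simple crosstab. The question names and answer options are hypothetical placeholders, and real respondents will not answer uniformly at random, so treat the output only as a check on data format and report layout.

```python
import random
from collections import Counter

# Hypothetical questions and answer options; replace with your own.
OPTIONS = {
    "device": ["desktop", "tablet", "smartphone"],
    "satisfaction": ["low", "medium", "high"],
}

def fake_completes(n, seed=0):
    """Generate n random completes, one dictionary per faux respondent."""
    rng = random.Random(seed)  # fixed seed so test runs are repeatable
    return [{q: rng.choice(opts) for q, opts in OPTIONS.items()} for _ in range(n)]

def crosstab(rows, row_q, col_q):
    """Count completes for each (row answer, column answer) pair."""
    return Counter((r[row_q], r[col_q]) for r in rows)

rows = fake_completes(1000)
table = crosstab(rows, "device", "satisfaction")

# Print one line per device with counts in the order low, medium, high.
for device in OPTIONS["device"]:
    print(device, [table[(device, s)] for s in OPTIONS["satisfaction"]])
```

Running the faux completes through the same export and reporting steps planned for the real data is what surfaces awkward formats early.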

Begin with a “slow start”

When the time comes to finally hit that start button, begin fielding with a subset of the total invites. Our team recommends sending roughly 5% to 10% of them first. This step adds time to fielding, but it is the last chance to confirm that links are working correctly, data reports are satisfactory, and there are no snags in the survey. If there are no issues, these upfront responses can be folded into the final data.
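Selecting the first wave at random keeps it from over-representing whoever happens to be at the top of the invite list. The sketch below shows one way to do that split. The function name `slow_start_split` and the respondent IDs are hypothetical, and drawing the subset at random is an assumption rather than something every panel or tool requires.

```python
import random

def slow_start_split(invites, fraction=0.10, seed=0):
    """Split invites into a random slow-start wave and the remainder."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be greater than 0 and at most 1")
    rng = random.Random(seed)  # fixed seed so the split can be reproduced
    shuffled = list(invites)   # copy so the caller's list is not modified
    rng.shuffle(shuffled)
    cutoff = max(1, round(len(shuffled) * fraction))  # send at least one invite
    return shuffled[:cutoff], shuffled[cutoff:]

# Hypothetical invite list of 200 respondent IDs.
invites = [f"respondent_{i}" for i in range(200)]
first_wave, remainder = slow_start_split(invites, fraction=0.05)
print(len(first_wave), "invites in the slow start;", len(remainder), "held back")
```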

The key takeaway is to test, test, and then test again. Your diligence will be rewarded with a successful study.

Related blogs

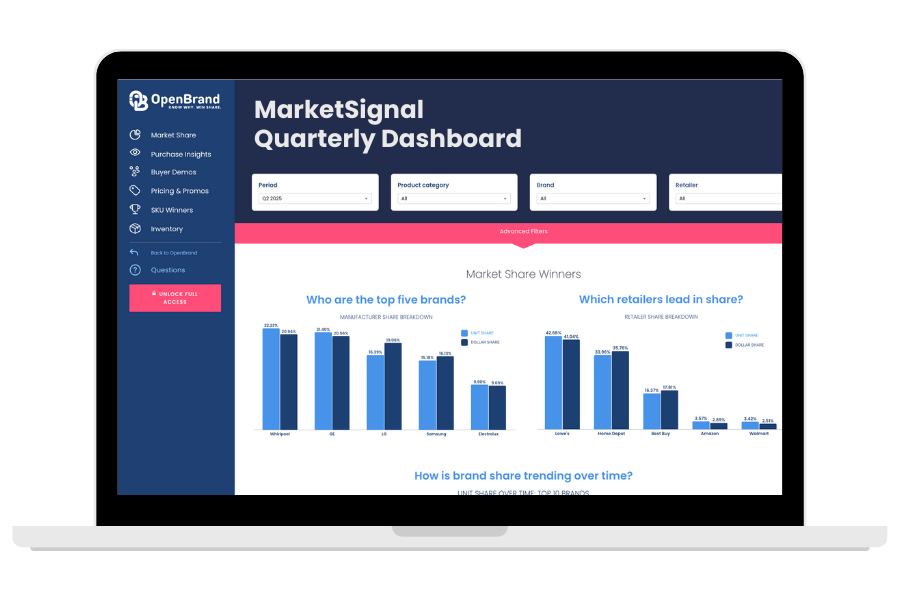

Explore Our Data

Free Quarterly Dashboards

Explore category-level data dashboards, built to help you track category leaders, pricing dynamics, and consumer demand – with no subscription or fee.

Related blogs

Consumer Price Index: Durable and Personal Goods | January 2026

This is the February 2026 release of the OpenBrand Consumer Price Index (CPI) – Durable and…

Business Printer Hardware: 2025 Year-In-Review

Our Business Printers: Printer Hardware 2025 Year-in-Review report recaps product launch activity,…

Headphones: 2025 Year-In-Review

Our Headphones: 2025 Year-in-Review report recaps launches, placements, pricing and advertising and…

Refrigerators: 2025 Year-In-Review

Our Refrigerators: 2025 Year-in-Review report recaps product launches, placements, pricing and…